Feature point matching between images with Python and the opencv module

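1. With SURF and SIFT already covered, we have the groundwork for feature point matching.

OpenCV provides two matchers, BFMatcher and FlannBasedMatcher. This article covers BFMatcher first.

BFMatcher: brute force. It tries every possible pairing of descriptors and picks the best match.

FlannBasedMatcher: approximate nearest-neighbor matching. The result is not guaranteed to be the best match.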
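To make "tries every possible pairing" concrete, here is a minimal NumPy sketch of a brute-force nearest-neighbor search using Euclidean (L2) distance. It is only an illustration of the idea, not OpenCV's implementation; the function name `brute_force_match` and the toy 2-D descriptors are assumptions made for this example (real SIFT descriptors have 128 dimensions).

```python
import numpy as np

def brute_force_match(desc_a, desc_b):
    """For each row of desc_a, find the closest row of desc_b (L2 distance).

    Hypothetical helper for illustration; returns (indices, distances).
    """
    a = np.asarray(desc_a, dtype=np.float64)
    b = np.asarray(desc_b, dtype=np.float64)
    # Pairwise differences via broadcasting: shape (len(a), len(b), dim).
    diff = a[:, None, :] - b[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    # Exhaustive search: take the minimum over every candidate in desc_b.
    best = dist.argmin(axis=1)
    return best, dist[np.arange(len(a)), best]

# Toy descriptors, made up for the example.
desc_a = [[0.0, 0.0], [10.0, 10.0]]
desc_b = [[9.0, 10.0], [0.0, 1.0], [50.0, 50.0]]
idx, d = brute_force_match(desc_a, desc_b)
print(idx, d)
```

The cost grows with the product of the two descriptor counts, which is why an approximate matcher can be attractive for large sets.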

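Code snippets:

Load the two images; one of them is a flipped copy of the other.

import cv2 as cv

from matplotlib import pyplot as plt

imageA = cv.imread('c:\\haitun.png')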

cv.imshow("imageA", imageA)

imageB = cv.imread('c:\\haitun1.png')

cv.imshow("imageB", imageB)

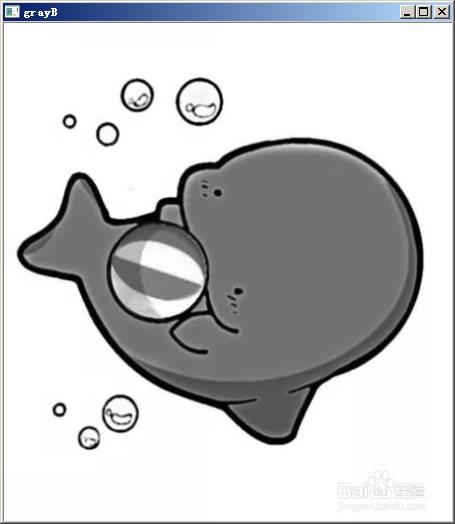
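If a second test image is not at hand, one can be derived from the first. The sketch below uses a tiny made-up array in place of an image returned by cv.imread, and shows that a mirror flip and a rotation are different transformations, which matters when the goal is to test rotation invariance. The array values and variable names are assumptions for illustration only.

```python
import numpy as np

# A tiny 2x3 stand-in for a loaded image (values are made up).
image = np.array([[1, 2, 3],
                  [4, 5, 6]], dtype=np.uint8)

mirrored = image[:, ::-1]          # horizontal flip (mirror image)
rotated_180 = np.rot90(image, 2)   # rotation by 180 degrees
rotated_90 = np.rot90(image)       # rotation by 90 degrees counter-clockwise

print(mirrored)
print(rotated_180)
print(rotated_90)
```

The same slicing and np.rot90 calls work on a three-channel image array, since they only touch the first two axes.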

2. As before, convert both images to grayscale.

grayA = cv.cvtColor(imageA, cv.COLOR_BGR2GRAY)

cv.imshow("grayA", grayA)

grayB = cv.cvtColor(imageB, cv.COLOR_BGR2GRAY)

cv.imshow("grayB", grayB)

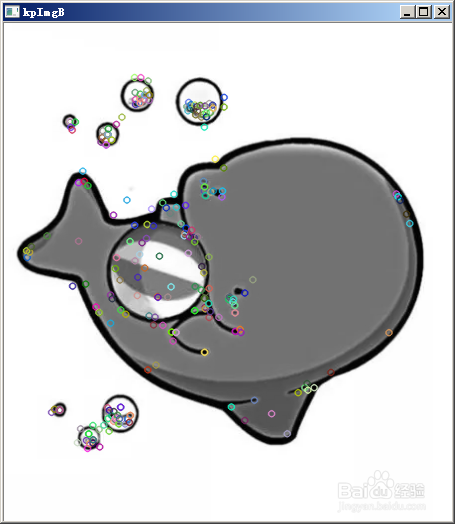

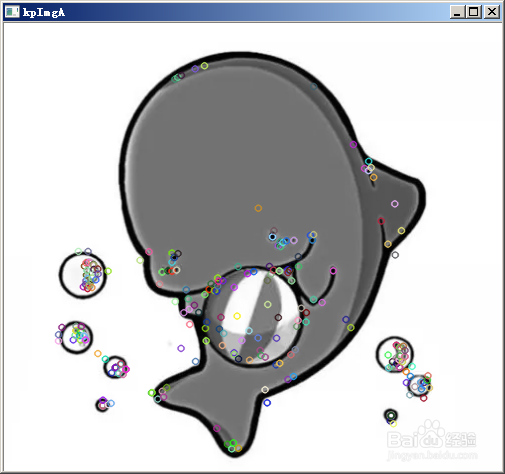
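For reference, the BGR-to-gray conversion is a weighted sum of the three channels, Y = 0.299 R + 0.587 G + 0.114 B. The NumPy sketch below reproduces that formula; the function name `bgr_to_gray` is an assumption for this example, and because OpenCV uses its own internal arithmetic, individual pixels may differ from cv.cvtColor by one gray level.

```python
import numpy as np

def bgr_to_gray(image_bgr):
    """Approximate cv.COLOR_BGR2GRAY with the standard luma weights.

    Hypothetical helper; expects an array whose last axis is (B, G, R).
    """
    b = image_bgr[..., 0].astype(np.float64)
    g = image_bgr[..., 1].astype(np.float64)
    r = image_bgr[..., 2].astype(np.float64)
    gray = 0.299 * r + 0.587 * g + 0.114 * b
    return np.rint(gray).astype(np.uint8)

# One row of four pixels: pure blue, pure green, pure red, white (BGR order).
pixels = np.array([[[255, 0, 0], [0, 255, 0], [0, 0, 255], [255, 255, 255]]],
                  dtype=np.uint8)
gray = bgr_to_gray(pixels)
print(gray)
```

Green contributes most to the result and blue least, so a pure blue pixel ends up much darker than a pure green one.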

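3. Initialize SIFT. Note that the first argument of xfeatures2d.SIFT_create is nfeatures, the maximum number of keypoints to keep, not a Hessian threshold (that parameter belongs to SURF). The value 1000 therefore caps the number of keypoints. In OpenCV 4.4 and later, SIFT is also available in the main module as cv.SIFT_create.

nfeatures = 1000

sift = cv.xfeatures2d.SIFT_create(nfeatures)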

Compute the keypoints and descriptors for each image, here using SIFT.

keypointsA, featuresA = sift.detectAndCompute(grayA,None)

keypointsB, featuresB = sift.detectAndCompute(grayB,None)

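Draw the keypoints. None is passed as the output image so that imageA and imageB are not overwritten before they are reused later for the color match drawing.

kpImgA = cv.drawKeypoints(grayA, keypointsA, None)

kpImgB = cv.drawKeypoints(grayB, keypointsB, None)

cv.imshow("kpImgA", kpImgA)

cv.imshow("kpImgB", kpImgB)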

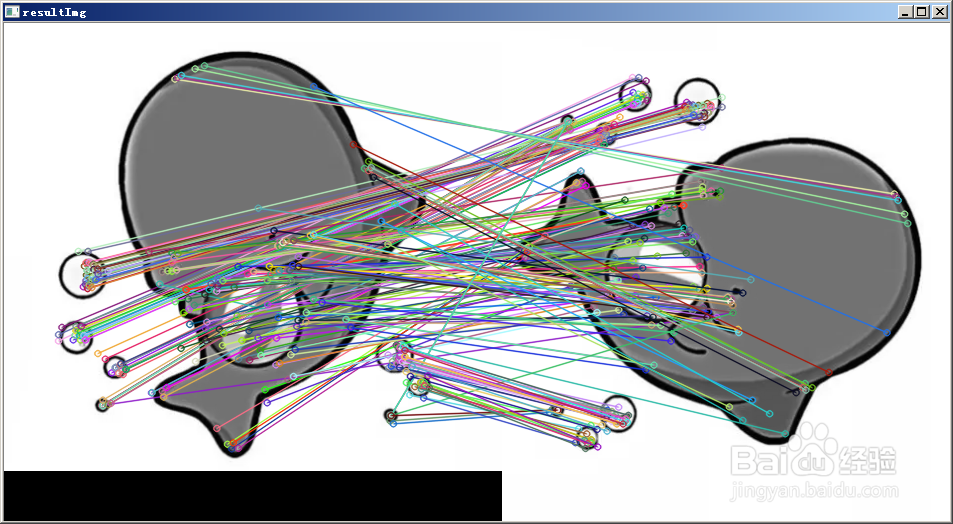

Use BFMatcher to find the best matches.

bf = cv.BFMatcher()

Run knnMatch, which returns the k best candidates (here k=2) for each descriptor, and store the result in matches.

matches = bf.knnMatch(featuresA, featuresB, k=2)

print(matches)

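4. For the knnMatch results, create a list that keeps only the matches meeting the criterion: the best candidate's distance must be less than 0.75 times the second-best candidate's distance.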

good = []

for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])
        print([m])
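This filter is commonly known as Lowe's ratio test: a match is kept only when the best candidate is clearly closer than the runner-up. The sketch below applies the same rule to a small, made-up distance matrix using NumPy alone; the function name `ratio_test` and the numbers are assumptions for illustration, not part of the OpenCV API.

```python
import numpy as np

def ratio_test(dist, ratio=0.75):
    """Keep (i, j) pairs whose best distance beats ratio * second-best.

    dist[i, j] is the distance from descriptor i in A to descriptor j in B.
    Hypothetical helper mirroring the loop over knnMatch results with k=2.
    """
    dist = np.asarray(dist, dtype=np.float64)
    # Indices of the two nearest candidates for every row.
    order = np.argsort(dist, axis=1)[:, :2]
    good = []
    for i, (j1, j2) in enumerate(order):
        if dist[i, j1] < ratio * dist[i, j2]:
            good.append((i, int(j1)))
    return good

dist = [[1.0, 9.0, 8.0],    # 1.0 < 0.75 * 8.0, clear winner, kept
        [5.0, 5.5, 20.0],   # 5.0 >= 0.75 * 5.5, ambiguous, dropped
        [7.0, 2.0, 3.0]]    # 2.0 < 0.75 * 3.0, kept
good_pairs = ratio_test(dist)
print(good_pairs)
```

Lowering the ratio makes the filter stricter and leaves fewer but more reliable matches; raising it does the opposite.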

Draw the matches with drawMatchesKnn and store the result in resultImg.

One result is drawn on the grayscale images, the other on the color images.

resultImg = cv.drawMatchesKnn(grayA, keypointsA, grayB, keypointsB, good,None, flags=2)

resultImg1 = cv.drawMatchesKnn(imageA, keypointsA, imageB, keypointsB, good,None, flags=2)

plt.imshow(cv.cvtColor(resultImg, cv.COLOR_BGR2RGB))

plt.show()

cv.imshow("resultImg", resultImg)

cv.imshow("resultImg1", resultImg1)

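5. Summary:

This completes the process from detecting feature points to matching them, and checks the rotation invariance of the features.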

import cv2 as cv
from matplotlib import pyplot as plt

# Load the two images and show them
imageA = cv.imread('c:\\haitun.png')
cv.imshow("imageA", imageA)
imageB = cv.imread('c:\\haitun1.png')
cv.imshow("imageB", imageB)

# Convert to grayscale
grayA = cv.cvtColor(imageA, cv.COLOR_BGR2GRAY)
cv.imshow("grayA", grayA)
grayB = cv.cvtColor(imageB, cv.COLOR_BGR2GRAY)
cv.imshow("grayB", grayB)

# The first argument of SIFT_create is nfeatures (maximum number of keypoints)
nfeatures = 1000
sift = cv.xfeatures2d.SIFT_create(nfeatures)
keypointsA, featuresA = sift.detectAndCompute(grayA, None)
keypointsB, featuresB = sift.detectAndCompute(grayB, None)

# Draw keypoints into new images so imageA and imageB stay untouched
kpImgA = cv.drawKeypoints(grayA, keypointsA, None)
kpImgB = cv.drawKeypoints(grayB, keypointsB, None)
cv.imshow("kpImgA", kpImgA)
cv.imshow("kpImgB", kpImgB)

# Brute-force matching, two candidates per descriptor
bf = cv.BFMatcher()
matches = bf.knnMatch(featuresA, featuresB, k=2)
print(matches)

# Keep a match only if it is clearly better than the runner-up
good = []
for m, n in matches:
    if m.distance < 0.75 * n.distance:
        good.append([m])
        print([m])

resultImg = cv.drawMatchesKnn(grayA, keypointsA, grayB, keypointsB, good, None, flags=2)
resultImg1 = cv.drawMatchesKnn(imageA, keypointsA, imageB, keypointsB, good, None, flags=2)

# matplotlib expects RGB, OpenCV images are BGR
plt.imshow(cv.cvtColor(resultImg, cv.COLOR_BGR2RGB))
plt.show()
cv.imshow("resultImg", resultImg)
cv.imshow("resultImg1", resultImg1)
cv.waitKey(0)
cv.destroyAllWindows()