Hadoop 2.5.2 Installation and Configuration

I. SSH configuration

1. First, install SSH on all three servers. The server IP addresses are 192.168.217.128, 192.168.217.129, and 192.168.217.130.

    sudo apt-get install openssh-server openssh-client

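2. Then run the following commands on each of the three servers to set up passwordless SSH login:

    $ ssh-keygen -t dsa -P '' -f ~/.ssh/id_dsa
    $ cat ~/.ssh/id_dsa.pub >> ~/.ssh/authorized_keys

Run ssh localhost. The first login asks for input (a password or a host key confirmation). Run ssh localhost again: if it logs in without asking for a password, the configuration succeeded.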

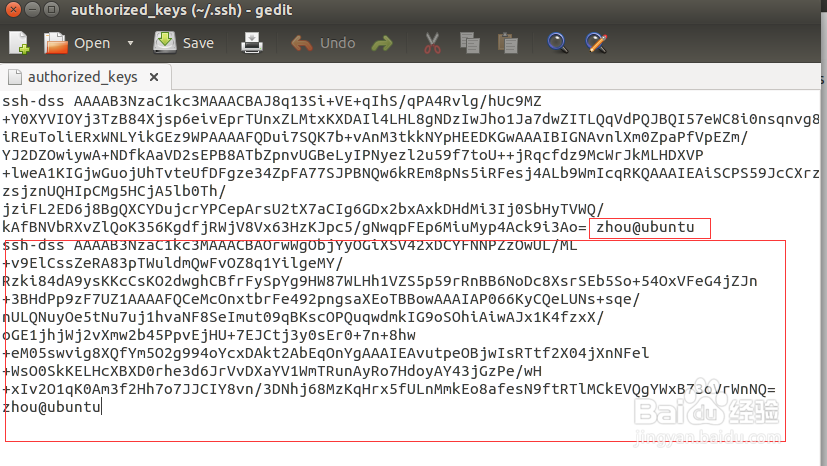
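
If ssh localhost keeps asking for a password after the key has been added, one common cause is file permissions: with its default StrictModes setting, sshd ignores keys whose directory or authorized_keys file is writable by other users. The sketch below tightens them. The function name secure_ssh_dir is a hypothetical helper, not part of OpenSSH, and the directory is assumed to default to ~/.ssh.

```shell
# secure_ssh_dir [DIR]  (hypothetical helper name)
# Restrict an SSH directory and its authorized_keys file to the owner only.
# DIR defaults to ~/.ssh when no argument is given.
secure_ssh_dir() {
    ssh_dir="${1:-$HOME/.ssh}"
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"                      # owner: rwx, others: nothing
    touch "$ssh_dir/authorized_keys"          # create the file if it is missing
    chmod 600 "$ssh_dir/authorized_keys"      # owner: rw, others: nothing
}
```

Calling secure_ssh_dir with no argument on each server and then retrying ssh localhost is a quick way to rule this cause out.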

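3. Add the contents of ~/.ssh/id_dsa.pub from ubuntu2 and ubuntu3 to the ~/.ssh/authorized_keys file on ubuntu1, either by copying them by hand or by running the commands below (zhou is the username). The key has to be appended: copying id_dsa.pub directly onto authorized_keys with scp would overwrite the keys already stored there.

On ubuntu2:

    $ cat ~/.ssh/id_dsa.pub | ssh zhou@ubuntu1 'cat >> ~/.ssh/authorized_keys'

On ubuntu3:

    $ cat ~/.ssh/id_dsa.pub | ssh zhou@ubuntu1 'cat >> ~/.ssh/authorized_keys'

If the cluster is started from ubuntu1, the Hadoop start scripts log in to ubuntu2 and ubuntu3 over SSH, so the public key of ubuntu1 must likewise be appended to authorized_keys on those two hosts.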

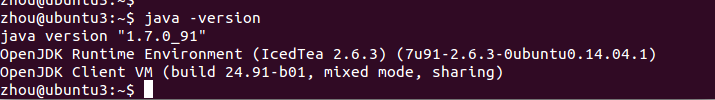
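
Appending a key by hand more than once leaves duplicate lines in authorized_keys. As a minimal sketch of an append that is safe to repeat, the function below adds a public key only when that exact line is not already present. The name append_pubkey and both file arguments are hypothetical, and the public key file is assumed to contain a single line.

```shell
# append_pubkey PUBKEY_FILE AUTHORIZED_KEYS_FILE  (hypothetical helper name)
# Append the key to the authorized_keys file unless the same line is already there.
append_pubkey() {
    pubkey_file="$1"
    auth_file="$2"
    key="$(cat "$pubkey_file")"
    touch "$auth_file"
    # -q quiet, -x match the whole line, -F treat the key as a fixed string
    if ! grep -qxF -e "$key" "$auth_file"; then
        printf '%s\n' "$key" >> "$auth_file"
    fi
}
```

On ubuntu1 this could be used after copying each worker's key to a separate file (for example a file named ubuntu2.pub, a name chosen here only for illustration): append_pubkey ubuntu2.pub ~/.ssh/authorized_keys.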

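II. Java 1.7 installation and configuration

5. Install and configure Java:

(1) Run:

    sudo apt-get install default-jdk

(2) Edit /etc/environment as follows:

    PATH="/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:$JAVA_HOME/bin"
    export JAVA_HOME=/usr/java/jdk1.7.0_79
    export CLASSPATH=.:$JAVA_HOME/lib:$JAVA_HOME/jre/lib

JAVA_HOME must point to the directory where the JDK is actually installed on your machine. Because /etc/environment is read by pam_env rather than by a shell, references such as $JAVA_HOME may not be expanded there; if java is not found afterwards, write out the full paths or put these lines in a shell profile such as /etc/profile instead.

(3) Test:

    java -version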
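
Since a wrong JAVA_HOME only shows up later when Hadoop fails to start, it can help to verify the directory right away. The following is a small sketch under the assumption that a valid JDK directory contains an executable bin/java; check_java_home is a hypothetical helper name.

```shell
# check_java_home DIR  (hypothetical helper name)
# Succeed only if DIR contains an executable bin/java.
check_java_home() {
    jdk_dir="$1"
    if [ -x "$jdk_dir/bin/java" ]; then
        echo "JAVA_HOME looks valid: $jdk_dir"
    else
        echo "no executable java found under $jdk_dir/bin" >&2
        return 1
    fi
}
```

A typical call after logging in again would be check_java_home "$JAVA_HOME".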

9. Configure etc/hadoop/mapred-site.xml. Add the following properties inside the <configuration> element:

    <property>
      <name>mapreduce.framework.name</name>
      <value>yarn</value>
    </property>
    <property>
      <name>mapreduce.jobhistory.address</name>
      <value>ubuntu1:10020</value>
    </property>
    <property>
      <name>mapreduce.jobhistory.webapp.address</name>
      <value>ubuntu1:19888</value>
    </property>
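
Because the same file has to be identical on all three servers, generating it from a script can be less error-prone than typing the XML by hand. The sketch below prints a complete mapred-site.xml containing the three properties of this step. The helper names hadoop_property and write_mapred_site are hypothetical, and the values are assumed to contain no characters that need XML escaping.

```shell
# hadoop_property NAME VALUE  (hypothetical helper name)
# Print one <property> element in Hadoop's configuration format.
hadoop_property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# write_mapred_site  (hypothetical helper name)
# Print a full mapred-site.xml with the three properties from this step.
write_mapred_site() {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    hadoop_property mapreduce.framework.name yarn
    hadoop_property mapreduce.jobhistory.address ubuntu1:10020
    hadoop_property mapreduce.jobhistory.webapp.address ubuntu1:19888
    echo '</configuration>'
}
```

Run from the Hadoop installation directory, write_mapred_site > etc/hadoop/mapred-site.xml would replace the file with the generated content.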

10. Configure etc/hadoop/yarn-site.xml. Add the following properties inside the <configuration> element:

    <property>
      <name>yarn.nodemanager.aux-services</name>
      <value>mapreduce_shuffle</value>
    </property>
    <property>
      <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
      <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
      <name>yarn.resourcemanager.address</name>
      <value>ubuntu1:8032</value>
    </property>
    <property>
      <name>yarn.resourcemanager.scheduler.address</name>
      <value>ubuntu1:8030</value>
    </property>
    <property>
      <name>yarn.resourcemanager.resource-tracker.address</name>
      <value>ubuntu1:8031</value>
    </property>
    <property>
      <name>yarn.resourcemanager.admin.address</name>
      <value>ubuntu1:8033</value>
    </property>
    <property>
      <name>yarn.resourcemanager.webapp.address</name>
      <value>ubuntu1:8088</value>
    </property>
    <property>
      <name>yarn.nodemanager.resource.memory-mb</name>
      <value>768</value>
    </property>

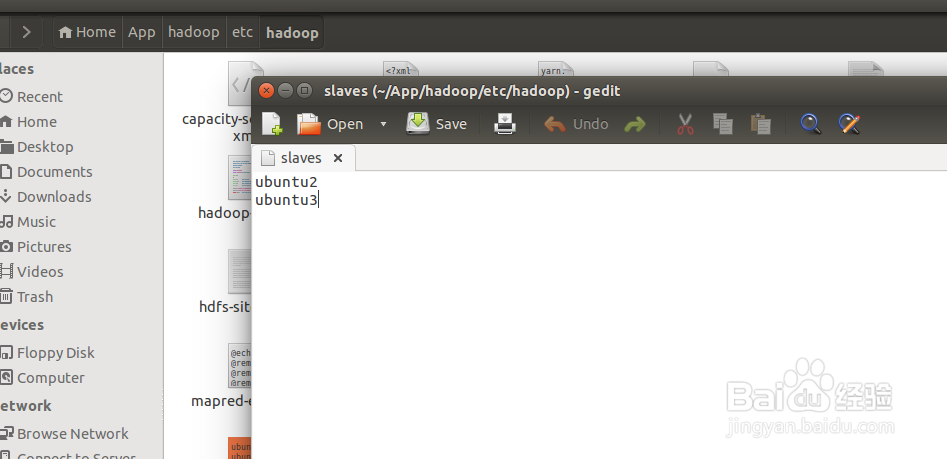
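
Hadoop does not complain about a misspelled property name, it simply falls back to the default, so a quick check of the file can save debugging time. The following sketch looks for a selection of the property names from this step in a yarn-site.xml file and reports any that are missing. The function name check_yarn_site is hypothetical, and the check is a plain text search, not real XML parsing.

```shell
# check_yarn_site FILE  (hypothetical helper name)
# Report which of the expected property names do not appear in FILE.
# Returns 0 when all names are present and 1 otherwise.
check_yarn_site() {
    site_file="$1"
    missing=0
    for name in \
        yarn.nodemanager.aux-services \
        yarn.resourcemanager.address \
        yarn.resourcemanager.scheduler.address \
        yarn.resourcemanager.resource-tracker.address \
        yarn.resourcemanager.admin.address \
        yarn.resourcemanager.webapp.address \
        yarn.nodemanager.resource.memory-mb
    do
        # -F fixed string: the dots in the name must match literally
        if ! grep -qF "<name>$name</name>" "$site_file"; then
            echo "missing property: $name" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

It can be run on each server as check_yarn_site etc/hadoop/yarn-site.xml from the Hadoop installation directory.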

11. Configure etc/hadoop/slaves, listing one worker hostname per line:

    ubuntu2
    ubuntu3
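
Before starting the cluster, it can be useful to confirm that every host in the worker list is reachable over passwordless SSH. The sketch below reads the worker file, ignoring comments and blank lines, and tries a non-interactive login to each host. The function names list_slaves and check_slaves are hypothetical; BatchMode and ConnectTimeout are standard OpenSSH client options, and the 5 second timeout is an arbitrary choice.

```shell
# list_slaves FILE  (hypothetical helper name)
# Print the hostnames in FILE, dropping comments, whitespace and empty lines.
list_slaves() {
    sed -e 's/#.*//' -e 's/[[:space:]]//g' -e '/^$/d' "$1"
}

# check_slaves FILE  (hypothetical helper name)
# Try a non-interactive SSH login to every host listed in FILE.
check_slaves() {
    for host in $(list_slaves "$1"); do
        # BatchMode=yes makes ssh fail instead of prompting for a password
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: passwordless SSH ok"
        else
            echo "$host: passwordless SSH failed" >&2
        fi
    done
}
```

Running check_slaves etc/hadoop/slaves on ubuntu1 should report both ubuntu2 and ubuntu3 as ok once the keys are in place.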